Resonance

Immersive installation blending sound, visuals, and interactivity in a physical space.

Overview

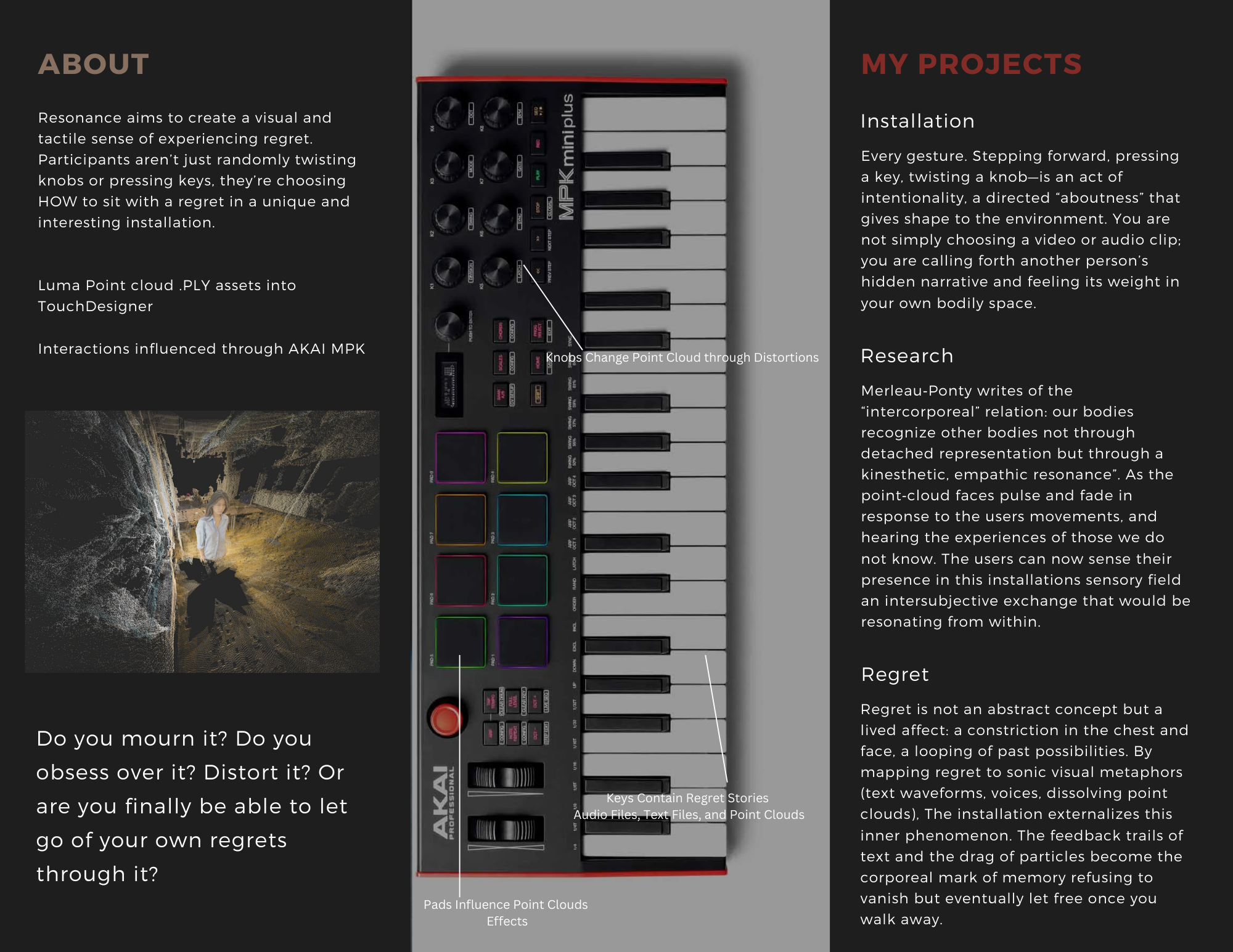

How can design and technology create moments of emotional resonance? In a world dominated by speed and distraction, this project asks participants to pause and engage with raw, vulnerable questions that are too often left unspoken.


Testing

Users interact with the installation and provide feedback.

Assets

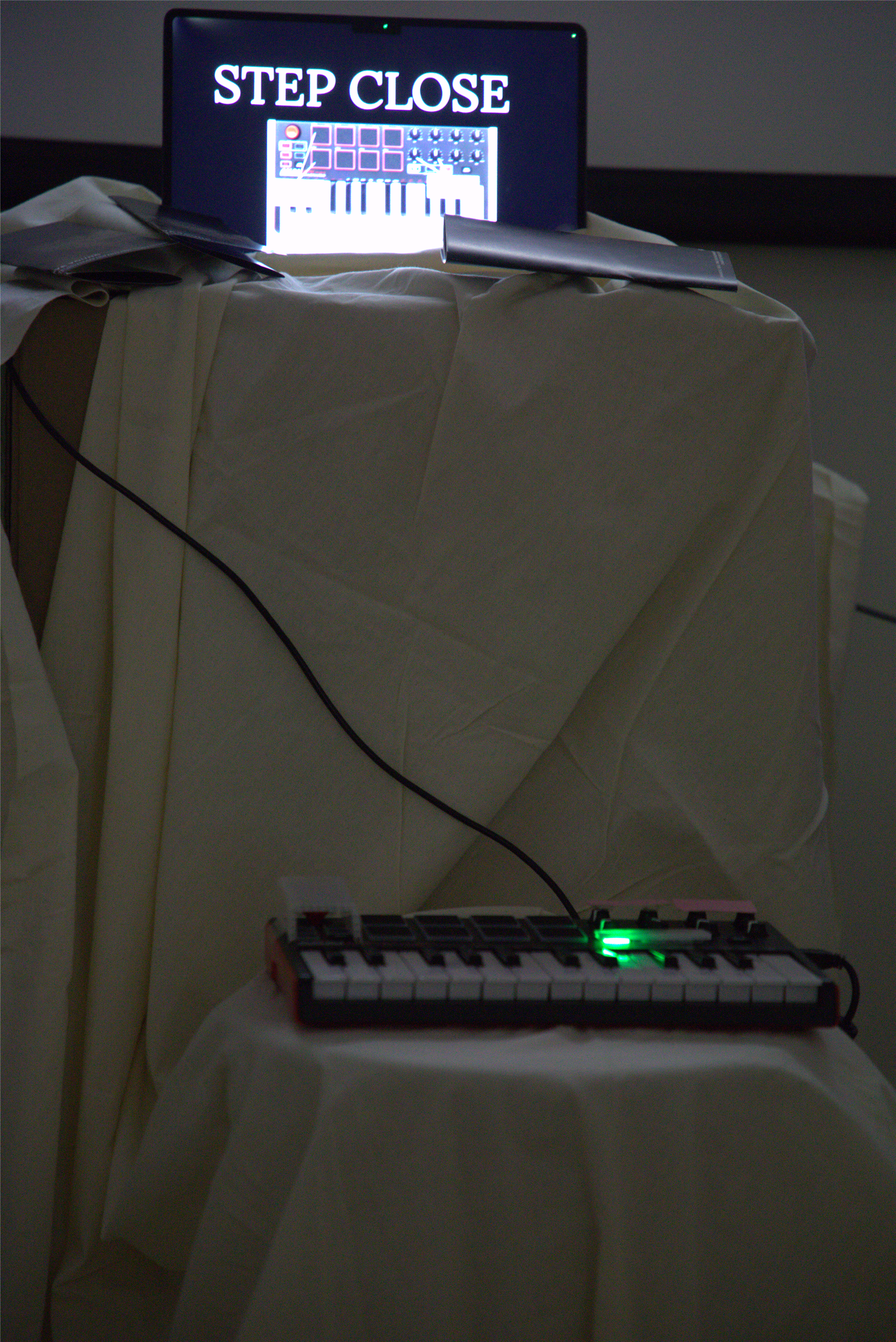

Point-cloud .ply files captured with Luma were used as the 3D assets in TouchDesigner.
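Before wiring a scan into TouchDesigner, it can help to check what a .ply export actually contains. A PLY file begins with a plain-text header that declares the data format, the element counts, and the per-vertex properties, so a small parser can list them. This is a minimal sketch assuming a standard PLY header. The function names are hypothetical, and the source does not say which properties a Luma export includes.

```python
def parse_ply_header(lines):
    """Parse PLY header lines (bytes) up to `end_header`.

    Returns (format, element counts, vertex property names).
    """
    it = iter(lines)
    if next(it, b"").strip() != b"ply":
        raise ValueError("not a PLY file")
    fmt, elements, vertex_props, current = None, {}, [], None
    for raw in it:
        parts = raw.decode("ascii", errors="replace").split()
        if not parts or parts[0] == "comment":
            continue  # skip blank and comment lines
        if parts[0] == "end_header":
            return fmt, elements, vertex_props
        if parts[0] == "format":
            fmt = parts[1]  # e.g. "ascii" or "binary_little_endian"
        elif parts[0] == "element":
            current = parts[1]
            elements[current] = int(parts[2])
        elif parts[0] == "property" and current == "vertex":
            vertex_props.append(parts[-1])  # the last token is the property name
    raise ValueError("header ended without end_header")


def read_ply_header(path):
    """Read only the header of a PLY file; the body (ASCII or binary) is not decoded."""
    with open(path, "rb") as f:
        return parse_ply_header(f)
```

Listing the vertex properties first shows which per-point attributes (positions, colors, or others) an export provides before they are mapped to render parameters.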

Process

All interactions were built in TouchDesigner.

Merleau-Ponty writes of the “intercorporal relation: our bodies recognize other bodies not through detached representation but through a kinesthetic, empathic resonance”.

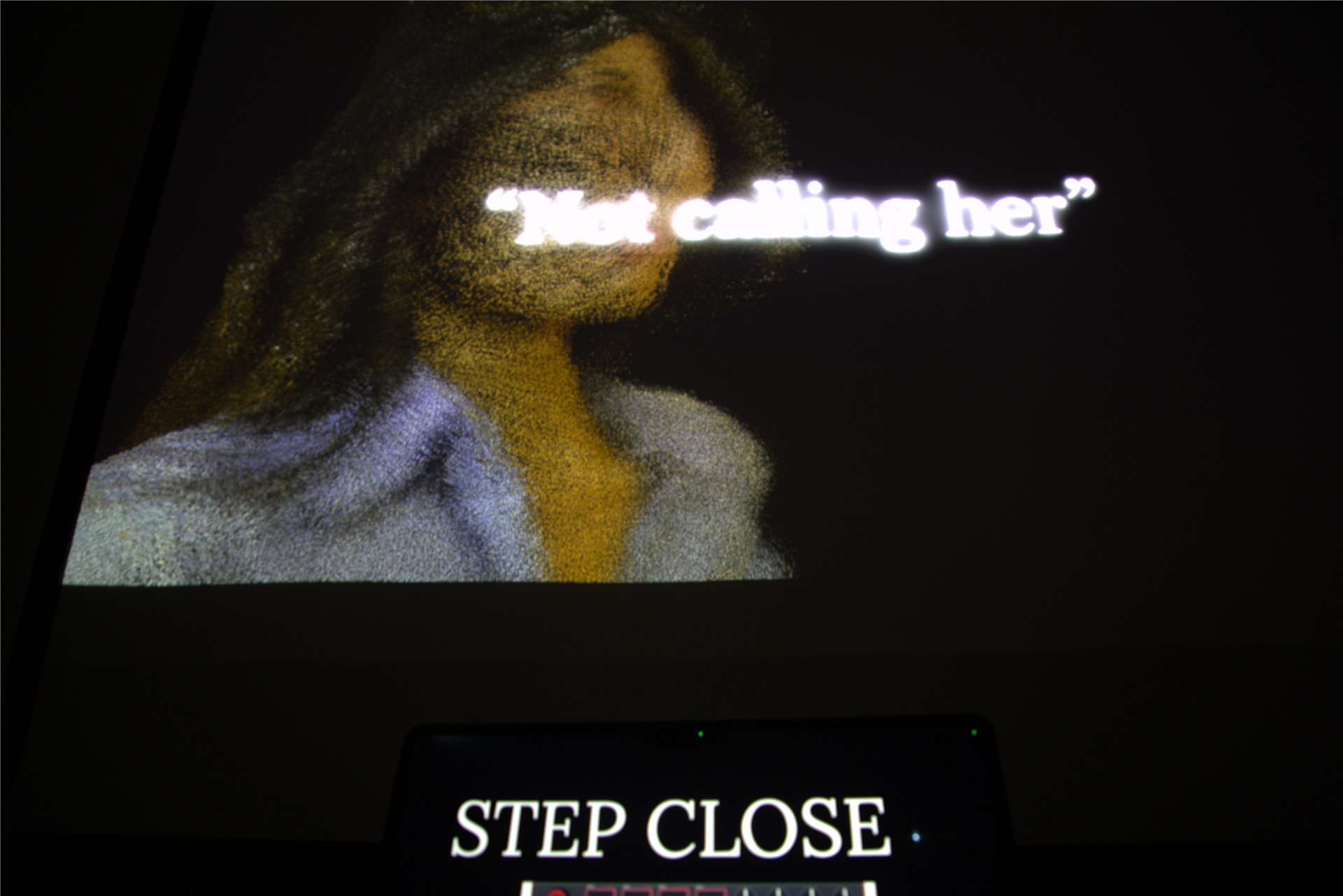

As the point-cloud faces distort and fade in response to the user's movements, and as users hear the experiences of people they do not know, they begin to sense their own presence in the installation once they take that walk forward. Regret is not an abstract concept but a lived affect. I see it as a constriction in the chest and face and a looping of past possibilities. By mapping regret to visual metaphors (text waveforms, voices, dissolving point clouds), the installation externalizes this inner phenomenon.

Key Features

- The visuals are 3D-scanned (Luma) point-cloud files of individuals, which I imported into TouchDesigner and used as assets to render.

- The keys are grouped across the four point-cloud files and mapped with Python. Each key is assigned its own audio file of a person's story answering the question “What is your biggest regret?”, along with the corresponding point cloud and text.

- The knobs and pads are the sole drivers of interaction, controlling the visual distortion and enabling the filters. The installation “activates” through a MediaPipe face-detection pipeline that I fed into TouchDesigner. Once a user's face is detected, the visuals begin. When no face is detected, a Text TOP displaying the question “What is your biggest regret?” is visible.
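The key mapping and face-triggered activation described in the list can be sketched in plain Python, for example as logic that a TouchDesigner Python callback might call. This is a hypothetical sketch, not the project's code. The file names, the even split of keys across the four scans, the `Story` and `FaceGate` names, and the frame counts are all assumptions. The debouncing (requiring several consecutive frames before switching state) is an added refinement that the source does not describe.

```python
from dataclasses import dataclass

# Hypothetical asset names: the source does not list the actual files.
POINT_CLOUDS = ["person_a.ply", "person_b.ply", "person_c.ply", "person_d.ply"]
KEYS_PER_CLOUD = 4  # assumption: keys split evenly across the four scans
QUESTION = "What is your biggest regret?"


@dataclass(frozen=True)
class Story:
    point_cloud: str  # which scan to render
    audio: str        # recorded answer to the question
    text: str         # transcript shown on screen


def build_key_map():
    """Assign consecutive key indices to stories, grouped by point-cloud file."""
    key_map = {}
    for cloud_index, cloud in enumerate(POINT_CLOUDS):
        for slot in range(KEYS_PER_CLOUD):
            key = cloud_index * KEYS_PER_CLOUD + slot
            key_map[key] = Story(
                point_cloud=cloud,
                audio=f"audio/story_{cloud_index}_{slot}.wav",  # hypothetical path
                text=f"text/story_{cloud_index}_{slot}.txt",    # hypothetical path
            )
    return key_map


class FaceGate:
    """Debounced presence switch (hypothetical): activate after `on_frames`
    consecutive frames with a face, deactivate after `off_frames` without one."""

    def __init__(self, on_frames=5, off_frames=30):
        self.on_frames = on_frames
        self.off_frames = off_frames
        self.active = False
        self._seen = 0
        self._missed = 0

    def update(self, face_detected):
        """Feed one frame's detection result; return whether visuals should run."""
        if face_detected:
            self._seen += 1
            self._missed = 0
            if self._seen >= self.on_frames:
                self.active = True
        else:
            self._missed += 1
            self._seen = 0
            if self._missed >= self.off_frames:
                self.active = False
        return self.active

    def overlay_text(self):
        """Text for the question overlay: shown only while nobody is present."""
        return "" if self.active else QUESTION
```

Each frame, `update()` would receive whether the detector found a face. While it returns `False`, `overlay_text()` supplies the question for the Text TOP, and a key press would look up its `Story` in the map from `build_key_map()` to pick the audio, point cloud, and transcript.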

User Reactions & Impact

Engagement Rate

Of visitors actively participated after design improvements, up from 30% in initial tests.

User Satisfaction

Average rating from post-experience surveys, with particular praise for intuitive interactions.

Average Session

Participants spent significantly longer than typical gallery interactions (3-5 minutes).

Participant Feedback

"I've never experienced anything like this. The way the installation responded to my movements felt like having a conversation with light and sound."

"What impressed me most was how naturally I understood what to do. It felt intuitive, not like typical tech demos that require explanation."

Reflection & Key Learnings

Making Interactions Discoverable

Initial user testing revealed that 70% of participants stood passively, unsure how to interact with the installation. The key challenge was making the interaction intuitive while maintaining the artistic integrity of the experience. I implemented a progressive disclosure system using ambient visual cues and proximity-based activation to guide user engagement.
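One way to stage ambient cues and proximity-based activation is sketched below. It is a minimal sketch under stated assumptions, not the project's implementation. It assumes the face detector reports a face-box width as a fraction of the frame width and treats that width as a rough proxy for distance. The class name, stage names, thresholds, and smoothing factor are all hypothetical.

```python
# Hypothetical thresholds: face-box width as a fraction of frame width,
# used here as a rough stand-in for how close a visitor is standing.
class ProximityDisclosure:
    """Progressive disclosure: question -> ambient cue -> full activation."""

    def __init__(self, near=0.25, far=0.08, alpha=0.2):
        self.near = near    # smoothed width at or above this: fully active
        self.far = far      # smoothed width at or above this: ambient cue
        self.alpha = alpha  # smoothing factor for the moving average
        self.smoothed = 0.0

    def update(self, face_width):
        """Feed one frame's face width (None when no face) and return the stage."""
        target = face_width if face_width is not None else 0.0
        # Exponential moving average damps detector jitter between frames.
        self.smoothed += self.alpha * (target - self.smoothed)
        if self.smoothed >= self.near:
            return "active"
        if self.smoothed >= self.far:
            return "ambient_cue"
        return "question"
```

Smoothing before thresholding is a deliberate choice here. Without it, a visitor standing near a threshold could make the piece flicker between stages.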

Future Applications

The interaction patterns developed for Resonance have since informed my approach to AR interfaces and digital product design, where discoverability remains a critical challenge.